Our certified, experienced team of technical experts delivers bespoke IT workforce solutions tailored to the unique requirements of the defence and aerospace sectors. Whether you need network and cloud migration, digital transformation, or cybersecurity, we make sure your IT infrastructure is robust and future-proofed. We are committed to delivering IT workforce solutions that help your business thrive in the digital age.

Talent Acquisition

LUNIQ connects global companies with top IT talent, drawing on our extensive network of industry connections and our expertise in sourcing the best contract and permanent professionals. We pride ourselves on providing tailored talent resourcing solutions that drive growth and success across a range of IT sectors, giving your organisation the right people to take your business to the next level.

Cloud Assessments

Unlock the full potential of your cloud infrastructure with LUNIQ’s comprehensive Cloud Assessments. Our expert team evaluates your current setup, identifies optimisation opportunities, and ensures your systems are secure, scalable, and aligned with your strategic goals.

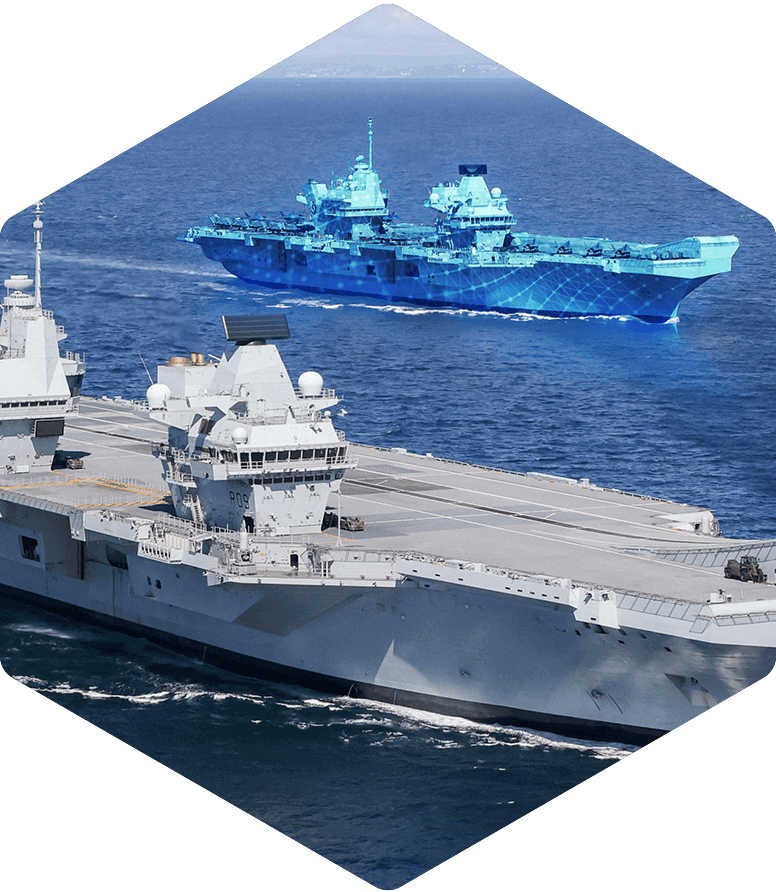

Digital Twin Development

LUNIQ is at the forefront of developing Digital Twin platforms: digital representations of physical systems that use real-world data to simulate their behaviour, so that prototypes can be tested in a virtual environment. Each platform is customised to our clients’ needs and requirements, ensuring every aspect of the physical system is accurately simulated for reliable testing and prediction. With a focus on quality and accuracy, we deliver fully bespoke solutions that produce exceptional results for your business.

Digital Workspace

Unlock your organisation’s productivity and innovation potential with LUNIQ’s advanced digital workspace solutions. Our bespoke solutions are designed to streamline your IT infrastructure, promote seamless collaboration, and strengthen security. Discover greater flexibility and get the most from your workforce with our cutting-edge solutions.

Managed Cybersecurity

Safeguard your organisation with LUNIQ’s comprehensive Managed Cybersecurity solutions. Our expert team provides continuous monitoring and proactive defence strategies to protect your systems from emerging threats. We tailor our approach to your specific needs, keeping your business secure, resilient, and compliant with industry standards.

Network Assessments

Optimise your IT infrastructure with LUNIQ’s in-depth Network Assessments. Our experts analyse your entire network to identify vulnerabilities, performance bottlenecks, and areas for improvement. We provide tailored recommendations to enhance security, boost efficiency, and ensure your network is fully aligned with your operational needs.

Operational Support

Streamline your day-to-day operations with LUNIQ’s Operational Support services. Our dedicated team provides ongoing assistance, ensuring your IT systems run smoothly, minimising downtime, and addressing issues before they escalate. We tailor our support to meet your specific requirements, keeping your business running efficiently and securely.

Virtual Security Assessments

Enhance your digital defences with LUNIQ’s Virtual Security Assessments. Our specialised team conducts thorough evaluations of your virtual environments, identifying vulnerabilities and ensuring compliance with the latest security protocols. We provide actionable insights to strengthen your systems, reduce risks, and keep your organisation secure in the evolving digital landscape.

Get in touch

Connect With One of Our Experts

Let’s discuss the challenges your organisation faces.